Jie Wang (@JieWang_ZJUI)

2026-01-13 | ❤️ 355 | 🔁 51

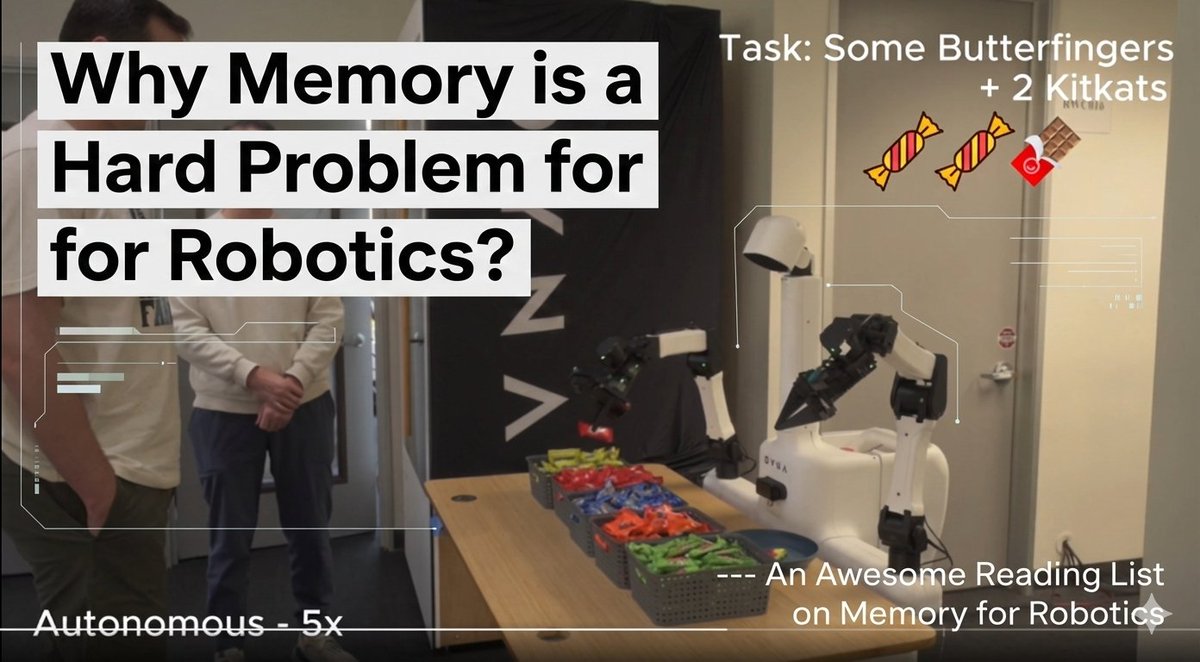

Vision-language-action models (VLAs) now let robots perform impressive manipulation tasks like folding clothes, making coffee, and cleaning dishes. Surprisingly, though, most VLAs lack memory. Unlike their close relatives, LLMs, VLAs have no context window and no access to history, so they repeatedly fail in the same way without learning from online experience.

But why? Why not simply extend the context window, as with LLMs? It’s not that we don’t want to; it’s that it’s extremely difficult. Here, I share a talk by @chelseabfinn at NeurIPS that scopes the challenges in developing long-horizon autonomy for embodied agents. At the end, there’s a reading list on memory for robotics. ⭐

Media

🔗 Related
