Andrei Bursuc (@abursuc)

2024-10-25 | ❤️ 153 | 🔁 18

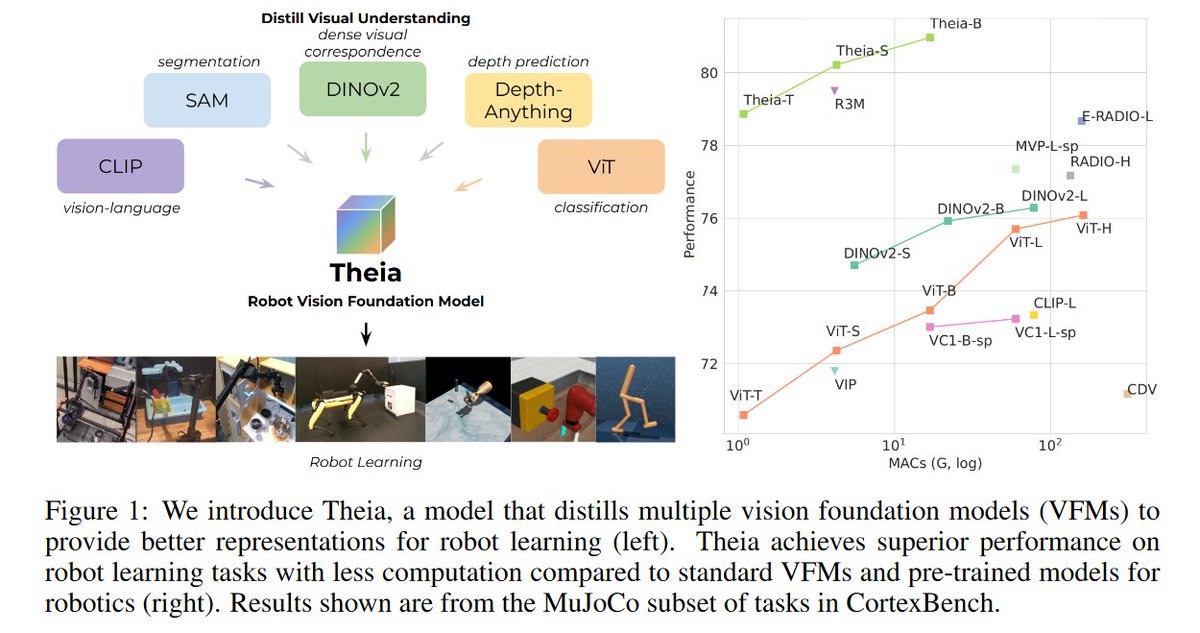

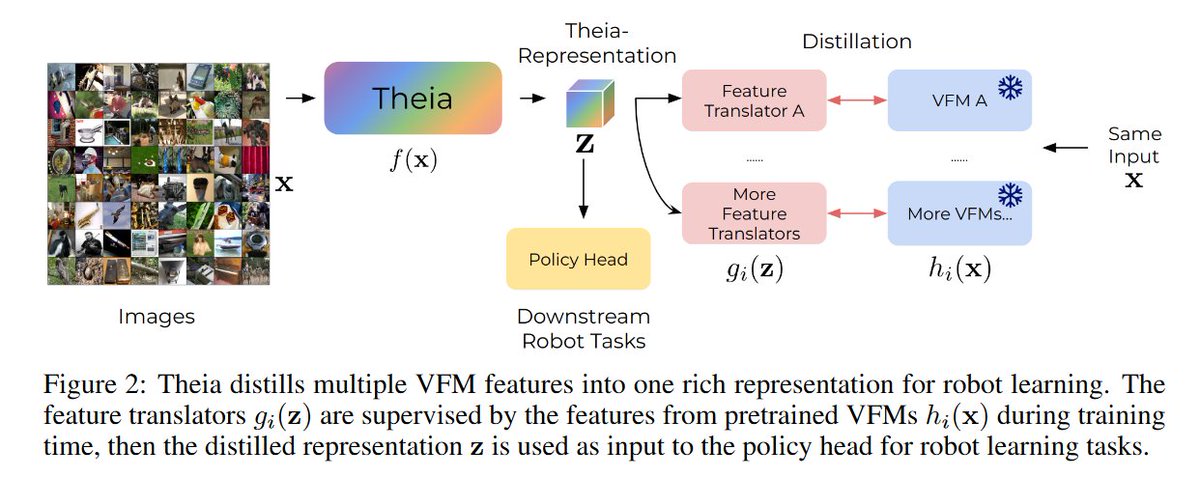

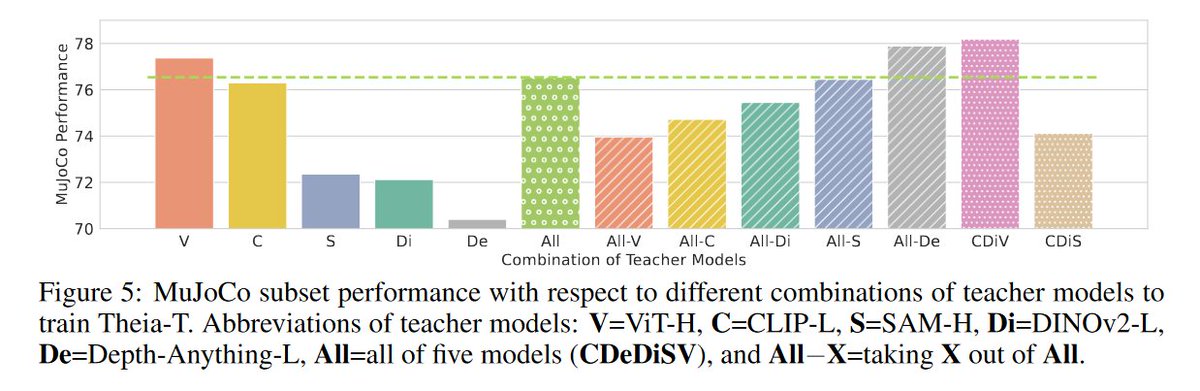

If you liked UNIC, check out Theia: a simple approach for distilling pretrained models (e.g., CLIP, DINOv2, SAM, DepthAnything) into a unified model with improved performance on policy learning https://theia.theaiinstitute.com/ https://x.com/abursuc/status/1849945966536950080/video/1


Quoted tweet

Andrei Bursuc (@abursuc)

Excellent work by @mbsariyildiz et al. on distilling multiple complementary visual encoders into a single one. This is particularly useful in the era of pretrained foundation models on different datasets and with different types of supervision 👇