Anand Bhattad (@anand_bhattad)

2025-03-01 | ❤️ 65 | 🔁 9

[1/3] ZeroComp is being presented as an Oral today at WACV2025!

Session Time: 2 PM local time (generative models)

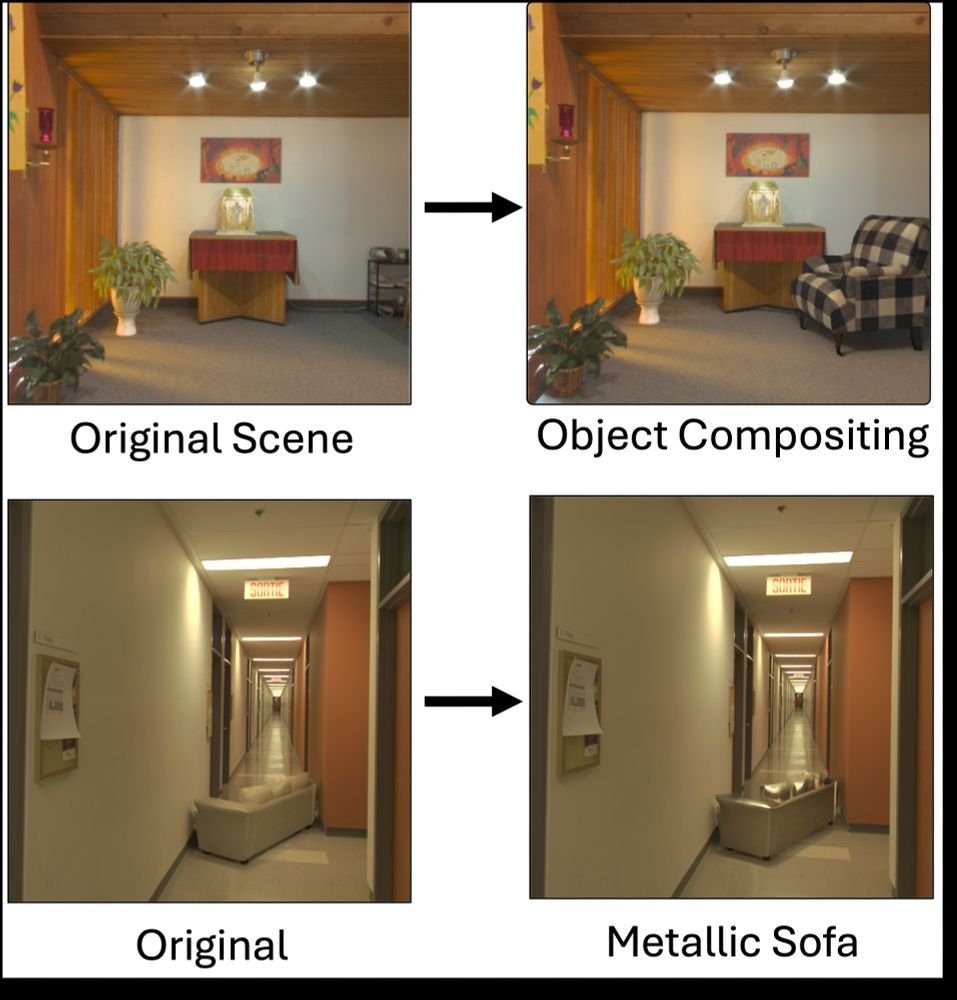

We train a diffusion model as a neural renderer that takes intrinsic images as input, enabling zero-shot object compositing (e.g., inserting an armchair) without ever seeing paired scenes with and without objects during training. This approach naturally extends to object editing tasks, like material changes (e.g., transforming a sofa into a metallic one), all in a zero-shot manner.

미디어

요약

WACV 2025에서 ZeroComp가 구두 발표로 소개됐다. 내재 이미지 입력 기반 확산 신경 렌더러로, 짝지어진 학습 데이터 없이도 제로샷 객체 합성·재질 편집(예: 소파를 금속 재질로 변경)을 수행한다.

🔗 Related

Auto-generated - needs manual review